Service File Configurations in Pega

It is recommended to read the previous blog article on the Parse Delimited rule before proceeding further.

In the Parse delimited article, we learned how to parse the input data using delimited characters.

Here is our own view on file processing in Pega

We can say this is a common architecture for file processing, irrespective of technology.

Mostly, the two main actions are

- Listen to the file

- Service the file

In this article, we will concentrate only on servicing the file.

Pega gives us a very simple rule to service a file – Service-File. All we have to do is configure it.

What is a service file rule?

– It is used to service (process) an input file.

– Servicing or processing involves importing data from external system files into the Pega system and executing operations such as creating a case or updating cases and objects (any type of processing, based on requirements).

Where do you reference a service file rule?

File listener rules

The file listener comes first in file processing. It listens for the file and invokes the service file rule for processing.

What are the configuration points in service file rule?

I am going to explain it in a different way

I give you a file, say an Excel file and ask you to process the file. Can you do it straight away?

No, you will be firing arrows of questions at me.

Question 1: What to do with the data?

Question 2: How do I recognize the data? Should I process each row individually or process the complete data (file) at once?

Question 3: How do I map (identify) the individual data?

Question 4: Do I need to perform any pre- or post-actions around processing the data?

All these questions need to be answered before configuring a service file rule.

Let’s go and create a new service file

First, the business requirement (I will use the same requirement in the coming posts): an external system sends us an input file with a list of customer details. For each record in the file, you need to create a new Sales case with the details provided.

Below is the sample file

I need to create 3 cases for the three customers – Prem, Raja & Sandosh

Simple requirement!!

How to create a new service file rule?

You can create a new service file in 2 ways

- Using the service wizard. ( We will see this in another article)

- Manual creation.

Step 1: Create a new service file: Records -> Integration services -> Service File -> Create

Step 2: Fill out the Create form

Customer package name – specify the service package you need to use.

For more details on service packages, please visit one of my favourite articles.

Customer class name – specify a name to group the related services.

Note: Do not confuse the customer class with a Pega class rule. The customer class is not required to be a Pega class.

Create and open. There are three main tabs in a service file rule:

a) Service

b) Method

c) Request

Service tab

There are 2 blocks in the Service tab

- Primary page settings

- Processing options

Primary page settings

You can specify the primary page and the corresponding class for the service file rule

Page name – All rules run under a page context, which we call the primary page. For the service file rule, you can specify a primary page, or you can simply use the default primary page, MyServicePage.

Primary page class – You can specify the class of the primary page. This class determines the rule resolution for the activities used in the service file rule

In this tutorial, we are going to create sales cases from the input provided, so I chose my sales class as the primary page class.

Data transform – You can specify any data transform to run as soon as the page is created. This can be used to initialize values for processing. I am not using it in my requirement.

Now we have completed the primary page configuration. The next block is Processing options.

Processing Options

Here you can decide the processing mode: synchronous or asynchronous.

Execution mode –

There are 3 options

a) Execute synchronously – this is the default configuration, where the service processing runs as soon as the request is received.

The other 2 options belong to asynchronous processing.

b) Execute asynchronously (queue for execution) – It creates a service request in a queue for background processing. A specific Pega rule, the ‘service request processor’, is responsible for processing it asynchronously.

Note: Asynchronous mode can be used for other services as well (REST , SOAP etc)

c) Execute asynchronously (queue for agent) – It creates a service request in queue for an agent to pick up and process at the backend.

Why do you want to use asynchronous processing?

1. Error handling – there may be a situation where you need to update some cases with the given input file. What happens when a case is locked and you cannot process it at that moment? In such cases, you can use asynchronous processing.

2. Response is not required immediately – I believe I discussed this in the SOAP and REST posts. When the service consumers (external applications) do not need the service response right away, we can perform asynchronous processing.

3. Performance, maybe – When there is a high load, you can always decide to do the processing at the backend.

Note: This is not the only way to improve the performance of file processing. You can also use multithreading, or parse and save the data in a database table synchronously so that an agent can act on those records at the backend. It is up to you to decide on the design!

Definitely I need to make a separate post on asynchronous processing!!

For now, let’s stick to the default synchronous processing.

Other 3 fields in the processing options are related to asynchronous processing.

Request processor – This is related to “Execute asynchronously (queue for execution)” mode. You need to specify the Service request processor rule for backend processing

Maximum size of the file to queue – Self-explanatory. This is applicable when the processing method is file at a time, which we will see later. Specify it only when required; otherwise leave it blank.

Requests per queue item – You can specify how many records go into each queue item.

For example: say you need to process 1000 rows individually in a file. You can use this field to specify that 100 rows go into a single queue item, so that 10 queue items are created.
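To make the arithmetic concrete, here is a small Python sketch of the grouping idea. It is only an illustration of the concept, not how Pega implements queueing; the function name `chunk_records` and the row values are made up for this example.

```python
def chunk_records(records, requests_per_queue_item):
    """Group records into queue items holding at most the given number of records."""
    if requests_per_queue_item <= 0:
        raise ValueError("requests_per_queue_item must be positive")
    return [
        records[i:i + requests_per_queue_item]
        for i in range(0, len(records), requests_per_queue_item)
    ]


# 1000 hypothetical rows, 100 rows per queue item
rows = [f"row-{n}" for n in range(1, 1001)]
queue_items = chunk_records(rows, 100)

print(len(queue_items))     # 10
print(len(queue_items[0]))  # 100
```

If the row count is not an exact multiple of the setting, the last queue item in this sketch simply holds the remainder.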

For now, we use synchronous processing, so leave the other configurations to default!

We have successfully configured the Service tab. Let’s switch our focus to the Method tab.

Method tab

You have 4 blocks in the method tab

- Processing options

- Record layout

- Record type identifier

- Data description

Processing options

This block decides how the data in the file is processed.

Processing method – the input data can be processed in one of 3 ways:

a) File at a time – It means the entire content in the input file serves as a single input data.

For example – say the external system provides us with a chunk of XML in a single file, and we have to service the file by creating a new case from the provided input. Here each input file is a single unit of input data. In such a case, we can use file at a time and process the entire data in the file at once.

b) Record at a time – It means each record can be considered as a single input.

For example – the input file contains rows of customer data and for each record, we need to create a new case.

c) By record type – By record type, we can process differently for different rows of data.

Say for example, below is the input data we receive from the external system.

– You see the first row starts with 10 – specifies the header. Do you need to create cases for header data?? No!!

– The next 3 rows start with 11 – specifies the customer data. For the record type 11, the external system wants us to create sales cases for the customer

– The next row starts with 12 – specifies the customer data. For the record type 12, the external system wants us to create a service case for the customer (but that is not our requirement!)

– The last row starts with 99 –specifies the footer. We should not create any case for footer data.

Hope you are clear now. The first column corresponds to record type

Note: The choice always depends on the external system's input file format.

In our tutorial, I am using “by record type”
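If it helps to think about it in code, the idea of "by record type" can be sketched in plain Python as below. This is not Pega code. The sample file content is hypothetical: only the customer names come from this article, and every other value, along with the helper names `create_sales_case` and `process_by_record_type`, is invented for the illustration.

```python
# Hypothetical sample file: only the names Prem, Raja and Sandosh come
# from the article; all other values are invented for illustration.
SAMPLE_FILE = (
    "10,CUSTOMERS,2024-01-01\n"
    "11,Prem,30,M\n"
    "11,Raja,28,M\n"
    "11,Sandosh,32,M\n"
    "12,Kumar,41,M\n"
    "99,4\n"
)


def create_sales_case(fields):
    # Stand-in for real case creation; it only returns a description.
    return ("Sales", fields[1])


# One handler per record type. Types without a handler (header 10,
# service 12, footer 99) are skipped, matching the requirement here.
HANDLERS = {"11": create_sales_case}


def process_by_record_type(text):
    cases = []
    for record in text.splitlines():
        if not record:
            continue
        record_type = record[:2]  # the first two characters identify the type
        handler = HANDLERS.get(record_type)
        if handler is not None:
            cases.append(handler(record.split(",")))
    return cases


print(process_by_record_type(SAMPLE_FILE))
# [('Sales', 'Prem'), ('Sales', 'Raja'), ('Sales', 'Sandosh')]
```

Supporting the service case for record type 12 would just mean adding one more entry to the handler table in this sketch.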

I will just move to the last block

Data description

This describes the data mode and the encoding type

Data mode – It can be either text only or binary only.

You can process the input record as either a Java character string or a Java byte string.

We know that the characters we enter are stored as binary.

Text only – the input data is treated as a Java character string and processed.

Binary only – the input is treated as a Java byte string and processed as raw bytes, without being decoded into characters.

I haven’t touched the parse structured rules (not the parse delimited rule we saw before), which let you parse binary input data.

Character encoding

What is character encoding?

– Characters are stored in the computer/database as bytes.

– Character encoding helps to unlock the code. It is the mapping between the bytes stored in the computer and the characters they represent. Without the right character encoding, the data will look like garbage.

For more details, you can search for character encoding, for example:

https://www.w3.org/International/questions/qa-what-is-encoding

– There are many character encoding standards like ASCII, UTF-8, UTF-16 etc

So, here we can specify the character encoding that corresponds to the input file.

You can see a list of available character encodings. If the data is already in your system, you can use the default.

Note: The character encoding is decided by the external system's input file. Each system's character encoding can be configured in its JVM.

For now, keep it simple. If you need to learn more, try to browse and find out about character encoding.
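A tiny Python experiment shows what "garbage" means here. It has nothing to do with Pega itself; the name used as sample text is made up for the demonstration.

```python
# A hypothetical customer name containing a non-ASCII character
text = "José"

# The same characters stored as bytes using UTF-8
utf8_bytes = text.encode("utf-8")
print(utf8_bytes)                  # b'Jos\xc3\xa9'

# Reading the bytes with the matching encoding gives the text back
print(utf8_bytes.decode("utf-8"))  # José

# Reading the same bytes with a different encoding gives garbage
garbled = utf8_bytes.decode("latin-1")
print(garbled)                     # JosÃ©
```

The bytes never changed; only the mapping used to read them did. That is why the encoding selected in the rule should match the one the external system used to write the file.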

I have selected the below configuration in the data description block.

Record layout

This decides how the end of each record in the input file is marked. It is used only with the record at a time and by record type methods.

Record terminator – you can specify any characters or escape sequences such as \n, \t etc. This marks the end of the record.

Note: line terminators vary for different input files (\n, \r)!

Record length – You can specify the length as a character count or byte count, based on the data mode.

When to use it?

– Only when the processing method is record at a time or by record type.

– When you are not using a record terminator.

– Do not use it with the processing method ‘by record type’ if the length varies per record type.

For now, I am going to use only the record terminator, \r\n. For a .csv file, use this as the record terminator.
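The two ways of finding record boundaries can be compared with a short Python sketch. Again, this only illustrates the idea and is not Pega's implementation; the function names and the sample data are assumptions made for the example.

```python
def split_by_terminator(data, terminator="\r\n"):
    """Split the file content wherever the record terminator appears."""
    return [record for record in data.split(terminator) if record]


def split_by_length(data, record_length):
    """Split the file content into fixed-size records (no terminator needed)."""
    return [
        data[i:i + record_length]
        for i in range(0, len(data), record_length)
    ]


# Hypothetical delimited content, one record per line
csv_content = "11,Prem,30,M\r\n11,Raja,28,M\r\n"
print(split_by_terminator(csv_content))
# ['11,Prem,30,M', '11,Raja,28,M']

# Hypothetical fixed-width content, 10 characters per record
fixed_content = "11PREM  3011RAJA  28"
print(split_by_length(fixed_content, 10))
# ['11PREM  30', '11RAJA  28']
```

Fixed-length splitting only works when every record really has the same size, which is why it does not suit record types of varying length.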

And final block in this tab

Record type identifier

I would like to revisit the example we saw before.

Offset – it decides the position at which the record type indicator starts. In our case, the record type values are 10, 11 and 99. They always start at the first position, and in Java the first position is always 0.

Also, I am using the text data mode, so the offset is a character count: 0.

Length – It decides the length of the record type value. In our case, it is 2 (character count).

Note: For binary data, use byte counts for offset and length.
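Offset and length work like a simple slice. The Python sketch below shows the idea with a hypothetical helper named `record_type`; it is not a Pega API, and the sample records are invented.

```python
def record_type(record, offset=0, length=2):
    """Return the record type found at the given zero-based offset.

    Works on text (character counts) and on bytes (byte counts).
    """
    return record[offset:offset + length]


# Text mode: offset and length are character counts
print(record_type("11,Prem,30,M"))        # 11

# Binary mode: offset and length are byte counts
print(record_type(b"99,4"))               # b'99'

# A type that does not start at the first position needs another offset
print(record_type("A|10|HEADER", offset=2))  # 10
```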

Here is my final configuration on the record type identifier.

We are done with the second tab. Now the final tab, where the actual processing takes place

Request tab

It again contains four blocks

a) Processing prolog

Here you can specify the pre-processing activity. In certain requirements you may need to use this pre-activity. If not required, you can leave it empty. I am leaving it empty.

b) Processing epilog

Here you can specify the post-processing activity. You can use this based on the requirement. If not, leave it empty.

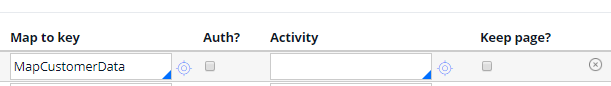

c) Parse segments

Here is where the actual parsing and processing takes place.

Record type – Use this only when the processing method is of type ‘by record type’. In our case yes!

So again, reminding you of the requirement input file.

I am going to use 3 rows for three record types 10, 11 and 99.

Once –

If true – it processes only one row. For the header and footer record types (10 & 99) you can select the checkbox, because there is always only one header row and one footer row.

If false – it continues to process multiple rows, like a loop. In our case, record type 11 may span multiple rows (3 customers in our example), so keep it unchecked.

Map to –

You get a variety of options to parse and map the data. In this example, I am going to use the parse delimited rule I created earlier.

Parse delimited rule

Here I have used 4 fields, which I get from the input file – RecordType, CustomerName, Age and Sex

For more details on creating parse delimited rule, please visit my previous article.
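As a rough mental model, the parse delimited step turns one record into named fields. The Python sketch below mimics that mapping with the four field names used above. It is not how the Pega rule is written, and the sample record values (apart from the name Prem) are made up.

```python
import csv

# Field names from the parse delimited rule described above
FIELD_NAMES = ["RecordType", "CustomerName", "Age", "Sex"]


def parse_delimited(record, delimiter=","):
    """Split one record on the delimiter and map the values to field names."""
    values = next(csv.reader([record], delimiter=delimiter))
    return dict(zip(FIELD_NAMES, values))


# Hypothetical record: age and sex are invented for the example
print(parse_delimited("11,Prem,30,M"))
# {'RecordType': '11', 'CustomerName': 'Prem', 'Age': '30', 'Sex': 'M'}
```

All values come out as text in this sketch, so a field like Age would still need converting before it is used as a number.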

Map to Key – Specify the key for the Map to field. Since I selected a parse delimited rule, I need to specify the rule name. The choice varies based on your Map to selection.

Auth? –

– In most cases, your service package will be authenticated, so it is not required.

– If you really want to use this, the system expects valid requestor credentials in the two parameters pyServiceUser and pyServicePassword on the parameter page.

Leave it unchecked.

Activity – you can specify the main activity that uses the parsed data and performs the required operation.

I am going to create and use an activity in my tutorial post!

Keep page? – If checked, the primary page (MyServicePage in our case) used for row processing is retained. In some cases, you may need the data parsed from the previous row. In our case, keep it unchecked so that the primary page is reinitialized every time.

Now the last block

Checkpoint processing

This block corresponds to commit and error handling. It decides when to commit the database changes and when to roll back if an error occurs.

This was introduced recently as an enhancement.

Frequency

– Say your file contains 1000 records and you don’t want to wait until all 1000 records are processed before committing your database changes. In such a case, you can specify the frequency as 100, so that a commit operation is performed after every 100 records are processed.

– If left blank, no automatic commit operation is performed.

Success criteria

– You can use a when rule that checks a condition as each record is processed.

– For example, if the when rule fails on the 151st record, a rollback occurs and backs out to the most recent commit position (maybe 100). File processing is then stopped.

– Use it only when required.
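The interplay of frequency and success criteria can be simulated in a few lines of Python. This is a simplified model under my own assumptions, not Pega's actual commit logic: the function name is hypothetical, the "database" is just a list, and the success check is assumed to run before each record is counted as processed.

```python
def process_with_checkpoints(records, frequency, is_success):
    """Simulate checkpoint commits and rollback on a failed success check.

    Returns (committed, pending, completed). A blank frequency (None or 0)
    means no automatic commit is performed in this model.
    """
    committed = []  # records whose changes were committed
    pending = []    # records processed since the last commit
    for record in records:
        if not is_success(record):
            pending.clear()  # roll back to the most recent commit
            return committed, pending, False  # file processing stops
        pending.append(record)
        if frequency and len(pending) >= frequency:
            committed.extend(pending)  # checkpoint commit
            pending.clear()
    return committed, pending, True


# 1000 hypothetical records, commit every 100, failure on the 151st
records = list(range(1, 1001))
committed, pending, completed = process_with_checkpoints(
    records, 100, lambda record: record != 151
)
print(len(committed), len(pending), completed)  # 100 0 False
```

Records 101 to 150 were processed but never reached the next checkpoint, so in this model they are lost with the rollback, which matches the "maybe 100" commit position mentioned above.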

Hope you got a detailed explanation of each individual field in the service file rule.